
Introduction

Big Data is of great importance to businesses nowadays. You may ask yourself why you need it. You need it if you do not want to discard data from years ago to make room for new data, or if you are simply gathering more and more data for your new decision-making models. So now you have a huge volume of data that needs to be processed. On top of that, this data needs to be moved from one place to another from time to time, and all of this has to happen in a timely and efficient way.

The Problem

This huge volume of data cannot be stored or processed using a traditional approach. This is why technologies such as Hadoop exist: it is an open source framework meant for solving the two issues mentioned, storage and processing. You will find plenty of papers describing job scheduling, prioritization, handling of long-running and short-running jobs, and so on, but all of this is within the scope of Hadoop itself. Beyond that, you are pretty much left to yourself.

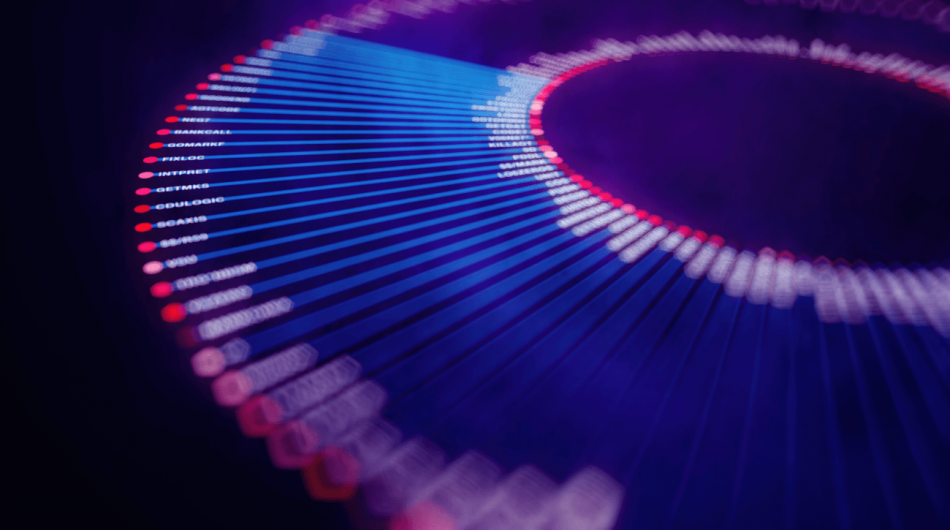

However, Hadoop itself does not know your business process model: which processes must go first, which come next, how many of them can run in parallel, or where you should put a newly created process. This is where NEO DI FMWK comes into play. You can arrange the whole picture piece by piece.

Imagine that you have some DWH data that requires heavy processing on Hadoop, and your DWH is waiting for those results in order to load and present reports to end users. Using an ETL tool, you develop one process that loads data into Hadoop and triggers Hadoop processing, and another process that loads the results into your target database. At this point, you do not need to know where in the whole picture these two fit; all you need is to define them in NEO DI FMWK as individual processes with their attributes. Once automatic execution is set, they will run as soon as all of their prerequisites have been executed.

Another example is Hadoop used as a data staging area. You can have a whole set of staging tables required for different enterprise models. Using NEO DI FMWK, a process can start its execution as soon as all of its required sources are loaded on the Hadoop side. This way, Hadoop parallel processing can be well utilized.
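The readiness rule described here can be illustrated with a minimal Python sketch. It is not the NEO DI FMWK API; the process names, source names, and the `startable` helper are all hypothetical, and the sketch only shows the idea that a staging process becomes eligible once every source it needs has landed.

```python
# Hypothetical names throughout; this only illustrates the readiness rule.
REQUIRED_SOURCES = {
    "stg_customer_model": {"crm_accounts", "billing_accounts"},
    "stg_sales_model": {"pos_sales", "web_orders", "crm_accounts"},
}

def startable(required, loaded, started):
    """Return processes whose required sources are all loaded and that have not started yet."""
    return sorted(
        name
        for name, sources in required.items()
        if sources <= loaded and name not in started
    )

# web_orders has not arrived yet, so only the customer model can start.
loaded = {"crm_accounts", "billing_accounts", "pos_sales"}
print(startable(REQUIRED_SOURCES, loaded, started=set()))
```

Processes that become startable at the same time do not depend on each other's output in this sketch, which is what lets them run in parallel on the Hadoop side.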

In the end, you are able to monitor all of this in a graphical interface: you can determine whether everything is going as planned, whether there are any waiting events, what the current and estimated durations are, and so on.

Conclusion

So, the question was: does NEO DI FMWK support Big Data technologies? NEO DI FMWK supports industry-leading ETL tools, and as long as you are using one, you are covered. NEO DI FMWK has the ability to read ETL repository metadata, and once you have loaded metadata from Big Data (Hadoop, to be precise), you are able to develop the process flow through the ETL tool and interconnect Hadoop with relational databases. Now you can really see the whole picture from a different perspective.

Architecture of DWH Group

• WH_SYS Schema for monitoring data loads

• WH_ULD Schema in which data from the interface is stored

• WH_CAT Schema for storing various catalogs and codes (data not obtained from external sources)

• WH_DSA Data Staging Area

• WH_ODS Enterprise model

• WH_TGT Star schema

• GDW_SRC_1 Schema for submitting data for source 1

• GDW_SRC_2 Schema for submitting data for source 2

• GDW_SRC_3 Schema for submitting data for source 3

• GDW_SRC_. . . Schema for submitting data for source . . .

Using NEO ODI FMWK to automate data load from multiple different sources

NEO ODI FMWK ensures the propagation of verified input datasets, since it is a natural extension for continuing the load of member data from the interface.

Once the interface data is properly verified, it continues its route through WH_DSA (data staging area), WH_ODS (enterprise model), WH_TGT, … under the orchestration of NEO ODI FMWK.

NEO ODI FMWK signals can check different custom conditions and can therefore signal data readiness for each individual interface of a particular source.
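One way to picture such a signal is as a custom predicate attached to a source and interface pair. The Python sketch below is an illustration under assumptions, not the framework's implementation: the `Signal` class, the `members` interface name, and the state fields (`rows`, `verified`, `control_file_present`) are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable

# All names here are hypothetical; this is not the NEO ODI FMWK API.

@dataclass(frozen=True)
class Signal:
    source: str                        # e.g. "GDW_SRC_1"
    interface: str                     # one interface delivered by that source
    condition: Callable[[dict], bool]  # custom readiness check

    def is_ready(self, state: dict) -> bool:
        """Evaluate the custom condition against the observed interface state."""
        return self.condition(state)

signals = [
    Signal("GDW_SRC_1", "members", lambda s: s["rows"] > 0 and s["verified"]),
    Signal("GDW_SRC_2", "members", lambda s: s["control_file_present"]),
]

# Observed state per (source, interface); in practice this would come from the database.
observed = {
    ("GDW_SRC_1", "members"): {"rows": 1200, "verified": True},
    ("GDW_SRC_2", "members"): {"control_file_present": False},
}

def ready_interfaces(signals, observed):
    """Return the (source, interface) pairs whose signal condition holds."""
    return [
        (sig.source, sig.interface)
        for sig in signals
        if sig.is_ready(observed[(sig.source, sig.interface)])
    ]

print(ready_interfaces(signals, observed))
```

Because each signal carries its own condition, two sources delivering the same interface can be judged ready by entirely different rules.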

NEO ODI FMWK has built-in logic for calculating prerequisites and knows when the prerequisites for each process are met (e.g., a table will wait until all the dimension tables it depends on are loaded).
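The dimension-before-fact example can be sketched with Python's standard `graphlib` module. This is only an illustration of prerequisite calculation in general; the table names and the `execution_waves` helper are hypothetical, and the framework's actual logic is not described in this article.

```python
from graphlib import TopologicalSorter

# Hypothetical process names; each key maps to the processes it must wait for.
PREREQUISITES = {
    "dim_customer": set(),
    "dim_product": set(),
    "dim_date": set(),
    "fact_sales": {"dim_customer", "dim_product", "dim_date"},
}

def execution_waves(prerequisites):
    """Group processes into waves; everything in one wave can run in parallel."""
    sorter = TopologicalSorter(prerequisites)
    sorter.prepare()  # raises graphlib.CycleError if the definitions are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so dependents are released
    return waves

print(execution_waves(PREREQUISITES))
```

Here the three dimension loads form the first wave and `fact_sales` is released only after all of them are marked done.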

NEO ODI FMWK allows one and the same process to be executed separately for each individually defined source. This eases maintenance, as it is not necessary to create a special process for each source, and if one source is delayed, the others can be loaded independently.
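A minimal Python sketch of this idea follows. The source names are taken from the schema list above, while `load_staging`, `run_per_source`, and the `delivered` set are hypothetical stand-ins rather than framework functions.

```python
SOURCES = ["GDW_SRC_1", "GDW_SRC_2", "GDW_SRC_3"]

def load_staging(source):
    """Stand-in for one shared process definition, parametrized by source."""
    return f"WH_DSA loaded from {source}"

def run_per_source(process, sources, delivered):
    """Run the same process once per delivered source; list the delayed ones separately."""
    done = {s: process(s) for s in sources if s in delivered}
    waiting = [s for s in sources if s not in delivered]
    return done, waiting

# GDW_SRC_2 is delayed; the other two sources load without waiting for it.
done, waiting = run_per_source(load_staging, SOURCES, delivered={"GDW_SRC_1", "GDW_SRC_3"})
print(done)
print(waiting)
```

Adding a fourth source in this sketch means adding one entry to `SOURCES`, not writing a new process.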

The NEO ODI FMWK deletion agent automatically deletes old data that has already been sent further into the system.
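The rule can be sketched in Python as follows. The article does not say how "old" is defined, so the `keep_days` retention window is an assumption, as are the batch fields and the `purge_propagated` function name.

```python
from datetime import date, timedelta

def purge_propagated(batches, today, keep_days):
    """Delete batches that were already propagated AND are older than the retention window.

    keep_days is an assumed retention parameter, not a documented framework setting.
    """
    cutoff = today - timedelta(days=keep_days)
    kept, deleted = [], []
    for batch in batches:
        is_old = batch["loaded_on"] < cutoff
        if batch["propagated"] and is_old:
            deleted.append(batch["id"])
        else:
            kept.append(batch)
    return kept, deleted

batches = [
    {"id": 1, "loaded_on": date(2024, 1, 1), "propagated": True},   # old and propagated
    {"id": 2, "loaded_on": date(2024, 1, 1), "propagated": False},  # old but not yet propagated
    {"id": 3, "loaded_on": date(2024, 3, 1), "propagated": True},   # propagated but recent
]

kept, deleted = purge_propagated(batches, today=date(2024, 3, 5), keep_days=30)
print(deleted)
```

Requiring both conditions keeps data that downstream layers have not consumed yet, no matter how old it is.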